Building Community-Specific FAIR Benchmarks: Insights from the OSTrails Workshop

In this joint blog post, John Shepherdson (Project Consultant, CESSDA ERIC) and Allyson Lister (FAIRsharing Coordinator - Content & Community, University of Oxford; and ELIXIR-UK) describe a recent workshop they co-led, in which community representatives began creating Benchmarks, tailored to their specific requirements, to help assess FAIR compliance.

On Friday, November 28th, the OSTrails project hosted a hands-on workshop where representatives from diverse European organisations began to define what compliance with the Findable, Accessible, Interoperable, and Reusable (FAIR) Principles means to them. The session brought together participants from multiple disciplines to tackle an important challenge in research data management: how to assess whether digital objects (e.g. datasets, software) truly meet the FAIR Principles in ways that are meaningful for specific research communities. Effective assistance begins with an assessment.

Why Community-Specific FAIR Assessment Matters

While the FAIR Principles provide a universal framework for research data stewardship, their implementation varies significantly across scientific domains. A genomics database has different requirements from a social science survey archive, and a climate model repository faces different challenges from a museum collection. The workshop recognised that one-size-fits-all assessment approaches miss the nuances that make data truly useful within specific research contexts. More broadly, this component of the OSTrails project addresses a documented problem: assessing or evaluating the same digital object with different tools can produce widely different results, because the FAIR Principles are open to interpretation and the checks each tool executes are often not transparent.

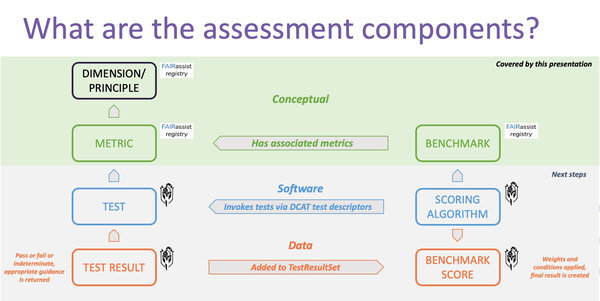

The components that need to be defined and implemented for a Benchmark quality assessment.

What We Accomplished

The workshop introduced participants to the FAIR Testing Interoperability Framework (Assess-IF), a structured approach that guides communities through creating tailored FAIR assessment frameworks designed to make transparent what is checked and how. It covered two essential steps:

Step 1: Identifying specific requirements. Organisers began by explaining why capturing each community's unique FAIR requirements is key to achieving a high level of FAIR alignment. Communities create such FAIR definitions by articulating which standards (reporting requirements, terminologies, models, formats, identifier schemes) matter most, which databases are essential, and how these apply to their specific digital objects. Participants were asked to express their requirements in narrative form; these descriptions will form the foundation for both the Conceptual components, detailed below, and the Software components that will ultimately execute the assessment.

Step 2: Defining Conceptual Components. Using pre-prepared templates, participants spent the remainder of the workshop defining FAIR Benchmarks¹ and associated Metrics² for their domains. For each of the FAIR Principles, they determined which existing generic metrics could be used directly and which needed specialisation. They also provided practical guidance for data producers on how to address failures, and identified example digital objects that would pass, fail, or return an indeterminate result against the tests that implement the metrics.
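To make the relationship between these Conceptual components more concrete, here is a minimal Python sketch, assuming a simplified in-memory model: a Benchmark groups Metrics, each Metric targets one FAIR sub-principle and is implemented by a Test that returns pass, fail or indeterminate, and failed Metrics point data producers to guidance. All class names, the `has_doi` and `uses_community_vocabulary` tests, and the "example-community-thesaurus" vocabulary are hypothetical illustrations; this is not the FAIR Testing Resource Vocabulary, nor the API of FAIRassist, FAIR Champion or FOOPS!.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable


class Result(Enum):
    PASS = "pass"
    FAIL = "fail"
    INDETERMINATE = "indeterminate"


@dataclass
class Metric:
    """Hypothetical model: a requirement scoped to one FAIR sub-principle."""
    name: str
    principle: str                  # exactly one dimension, e.g. "F1"
    guidance: str                   # advice for data producers if the test fails
    test: Callable[[dict], Result]  # the Test that implements this Metric


@dataclass
class Benchmark:
    """Hypothetical model: a community-specific grouping of Metrics."""
    community: str
    narrative: str
    metrics: list[Metric] = field(default_factory=list)

    def assess(self, digital_object: dict) -> dict[str, Result]:
        # Run every Metric's test against the same digital object.
        return {m.name: m.test(digital_object) for m in self.metrics}

    def guidance_for_failures(self, results: dict[str, Result]) -> list[str]:
        # Collect producer-facing advice for each Metric that failed.
        return [m.guidance for m in self.metrics if results[m.name] is Result.FAIL]


def has_doi(obj: dict) -> Result:
    # Hypothetical generic test: is the identifier a DOI URL?
    metadata = obj.get("metadata")
    if metadata is None:
        return Result.INDETERMINATE  # no metadata to inspect
    identifier = metadata.get("identifier", "")
    return Result.PASS if identifier.startswith("https://doi.org/") else Result.FAIL


def uses_community_vocabulary(obj: dict) -> Result:
    # Hypothetical specialised test: keywords come from an agreed vocabulary.
    metadata = obj.get("metadata")
    if metadata is None:
        return Result.INDETERMINATE
    vocabulary = metadata.get("keyword_vocabulary")
    return Result.PASS if vocabulary == "example-community-thesaurus" else Result.FAIL


benchmark = Benchmark(
    community="Example social science archive",
    narrative="Datasets carry a DOI and use the community thesaurus for keywords.",
    metrics=[
        Metric("doi", "F1", "Ask your repository to mint a DOI.", has_doi),
        Metric("vocabulary", "I2", "Tag keywords using the community thesaurus.",
               uses_community_vocabulary),
    ],
)

# Example digital objects that should pass, fail, or be indeterminate.
examples = {
    "passes": {"metadata": {"identifier": "https://doi.org/10.1234/example",
                            "keyword_vocabulary": "example-community-thesaurus"}},
    "fails": {"metadata": {"identifier": "local-id-42"}},
    "indeterminate": {},
}

for label, obj in examples.items():
    results = benchmark.assess(obj)
    print(label, {name: r.value for name, r in results.items()})
    for advice in benchmark.guidance_for_failures(results):
        print("  guidance:", advice)
```

Keeping each Test as a plain function attached to its Metric mirrors the separation between narrative Metrics and the Software components that execute them: a community could swap in a specialised test without changing the Benchmark structure.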

The Road Ahead

The workshop was just the beginning. Our next steps include:

· Follow-up consultations with each pilot to refine their benchmark narratives

· Registration of metrics and benchmarks within FAIRassist, the registry for these components within FAIRsharing

· Test specification for any specialised metrics developed by the communities

· Implementation and deployment of these benchmark assessments using tools including FAIR Champion and FOOPS!

· Assessment and improvement of the Pilots’ Digital Object collections against their community-defined benchmarks.

From Assessment to Assistance: A Collaborative Path to Better Data

What made this workshop particularly valuable was its collaborative spirit. Participants weren't just passively learning; they were actively building assessment frameworks that will directly affect their communities' data practices. By the end of the session, each pilot had a concrete starting point: a narrative document describing their benchmark requirements and a roadmap for making their digital objects demonstrably FAIRer.

The goal of specialised FAIR definitions extends beyond mere compliance. Assess-IF empowers data specialists and professionals to define how the FAIRness of digital objects should be assessed, in a way that is transparent and anchored to their needs and community norms. In turn, the framework will help assessment and evaluation tools to put community requirements into action, so that results are consistent regardless of the tool used.

As the OSTrails project moves forward with implementation, these community-defined benchmarks will serve as living documents, evolving alongside research practices and technologies while maintaining the core commitment to making data Findable, Accessible, Interoperable, and Reusable for those who need it most.

Learn more about the OSTrails project's National Pilots at https://ostrails.eu/national-pilots and the Thematic Pilots at https://ostrails.eu/thematic-pilots.

The slides from the workshop are available at DOI: https://doi.org/10.5281/zenodo.17804548

The FAIR Testing Resource Vocabulary can be found here: https://w3id.org/ftr/

See also: https://blog.fairsharing.org/?p=1076

1: Benchmark: A community-specific grouping of metrics, accompanied by a narrative describing how a community defines FAIR. Communities can choose the granularity of their benchmarks with regard to subject area and digital object type: benchmarks may be agnostic of object type or subject area, or may be scoped as tightly as their authors require. Source: OSTrails Architecture (https://docs.ostrails.eu/en/latest/architecture/fair_if.html)

2: Metric: Narrative description that a Test must wholly implement. Each metric should implement exactly one dimension (e.g. one sub-principle from the FAIR Principles). Metrics may be domain-agnostic or domain-specific. Source: OSTrails Architecture (https://docs.ostrails.eu/en/latest/architecture/fair_if.html)